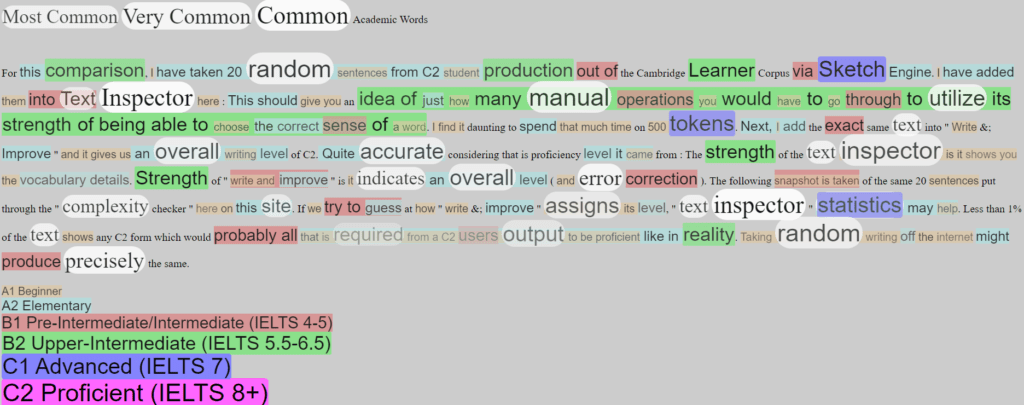

For this comparison, I took 20 random sentences written by C2-level students from the Cambridge Learner Corpus, accessed via Sketch Engine.

I have entered them into Text Inspector here:

This should give you an idea of just how many manual steps you would have to go through to make use of its main strength: choosing the correct sense of each word. Spending that much time on 500 tokens seems daunting to me. Next, I entered exactly the same text into “Write & Improve”, which gave an overall writing level of C2. That is quite accurate, considering C2 is the proficiency level the sentences came from:

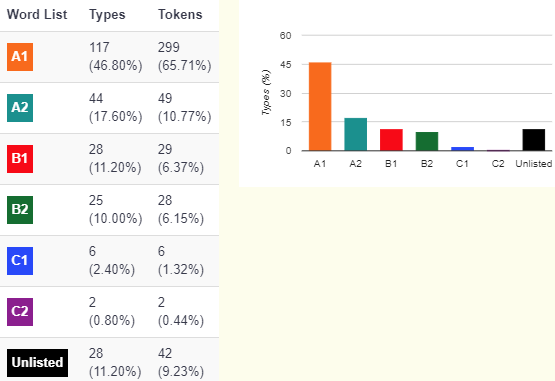

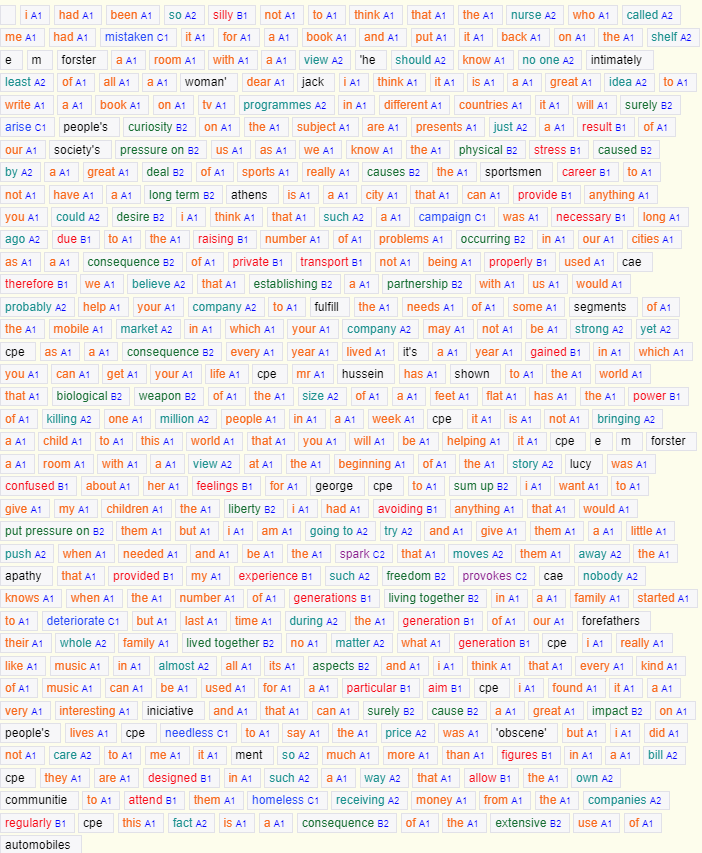

The strength of Text Inspector is that it shows you the vocabulary details. The strength of “Write & Improve” is that it gives an overall level (along with error correction). The following snapshot shows the same 20 sentences put through the “complexity checker” here on this site.

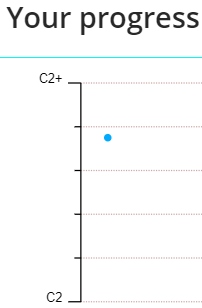

If we try to guess how “Write & Improve” assigns its level, the Text Inspector statistics may help. Less than 1% of the text shows any C2 form, which is probably all a proficient C2 user’s output would contain in reality. Taking random writing off the internet might produce exactly the same result. Indeed, the featured image for this post contains no C2 grammar, only 1% C2 vocabulary and a lot of academic vocabulary. Sadly, for my own writing, I have only achieved:

This should show, firstly, that being a native speaker, as I am, being relatively well educated, and even writing about quite academic topics still does little to prove that I am C2. Oh well, I guess I need to deliberately add higher-level structures just to get that C2 certification. No, just kidding. Secondly, and more seriously, it is evidence that online checkers cannot take discourse into account: whether the sentences are unrelated or all related to one another makes little difference.